An independent evaluation of MT engine performance in the COVID domain

Summary

This study evaluates the performance of machine translation engines—both stock and custom NMT—for COVID-related content.

This evaluation has been conducted with two goals in mind:

1. Evaluate which MT engines work best for COVID-related content in different language pairs.

2. Share our approach to custom NMT training, identifying steps to be taken and potential pitfalls that may occur. Custom NMT training is costly, and solid ROI is essential for everyone undertaking it. With this in mind, we present our methodology, challenges, and factors that influenced this study's outcomes.

The research was performed on the Corona Crisis Corpus made available by TAUS.

The systems used in these reports were trained and used for translation between June 26th and June 30th 2020. They may have changed many times since then. Please remember that each evaluation is unique, and your outcome will depend on the kind and quality of your data, language pairs, domain, and MT engines.

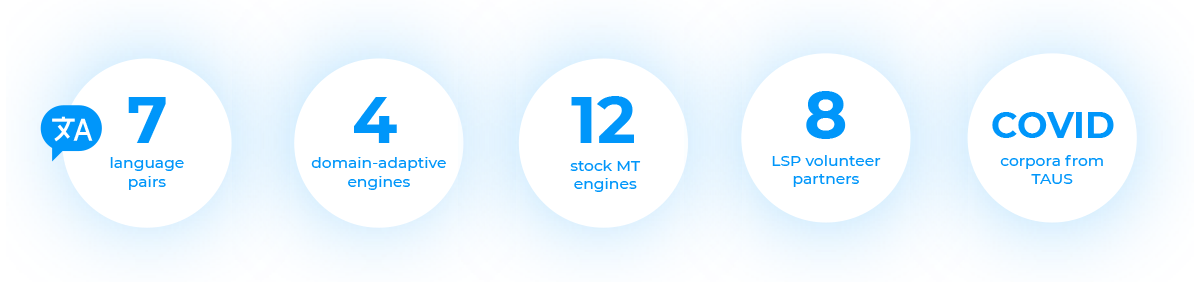

Key facts and numbers

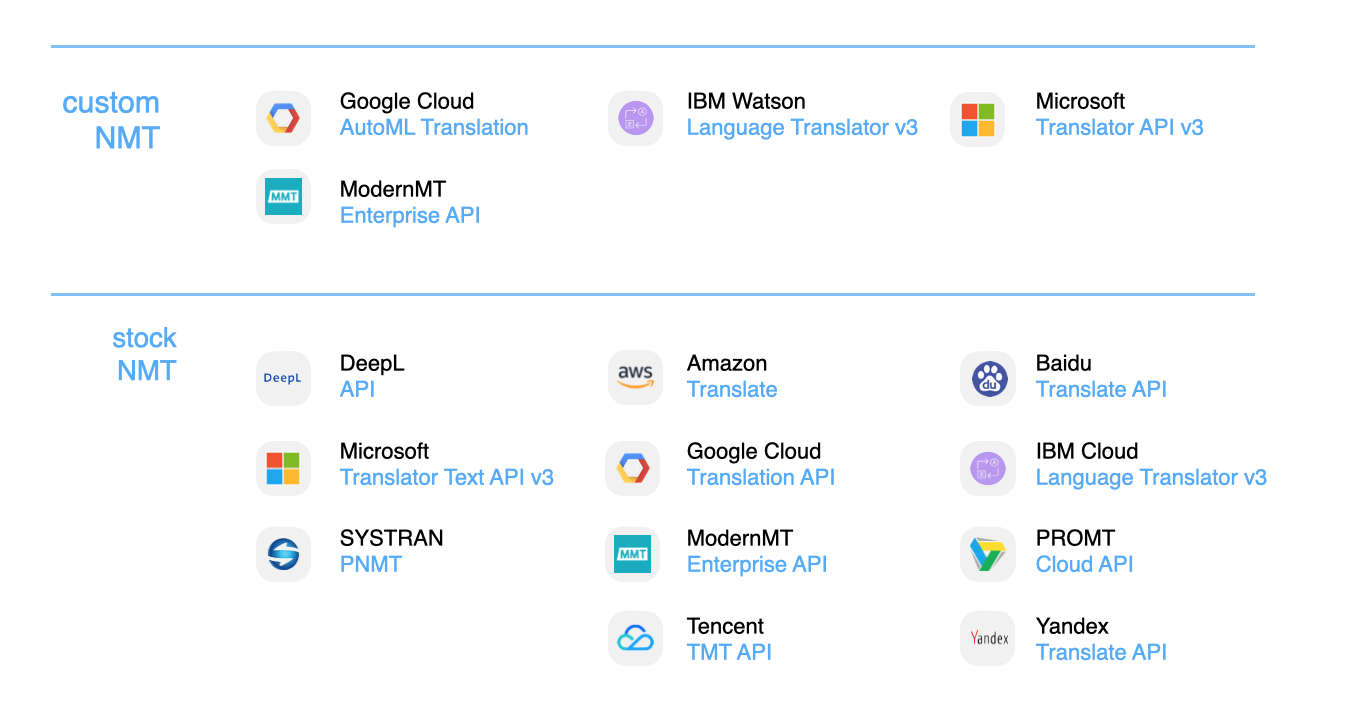

MT Engines assessed

- STOCK NMT include DeepL Translator, Microsoft Translator, SYSTRAN Translate, Amazon Translate, Google Cloud Translation, ModernMT Human-in-the-loop, Tencent Cloud TMT, Baidu Translate, IBM Watson Language Translator, PROMT Cloud, Yandex Cloud Translate.

- CUSTOM NMT include Google Cloud AutoML, ModernMT Human-in-the-loop, IBM Watson Language Translator, Microsoft Translator, Yandex Cloud Translate (for English>Russian only).

- Language support

- Customization enablement

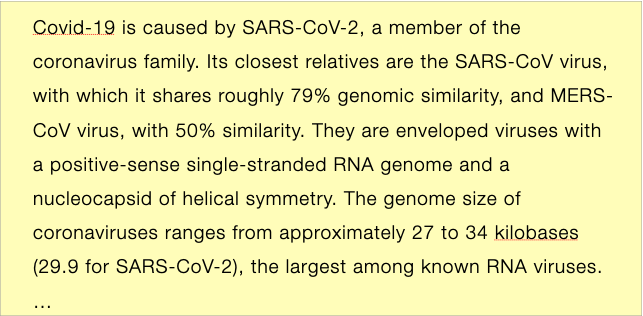

A sample of text that was used in the evaluation

Scores

Corpus-level scores include hLEPOR, TER, SacreBLEU.

Segment-level scores include hLEPOR, TER.

Scores show that the customized models translate much closer to the reference set.

Training and evaluation approach

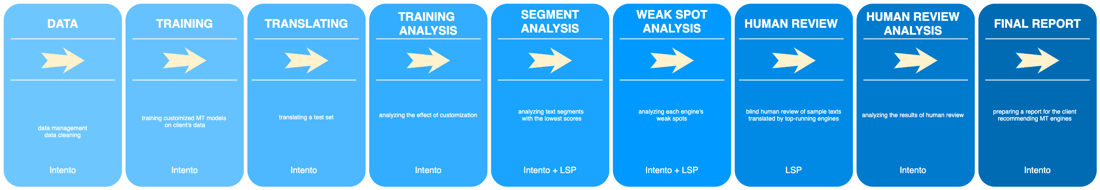

Evaluation workflow

- Clean the data

- Train custom models

- Compare performance to stock models

- Analyze engines’ weak spots

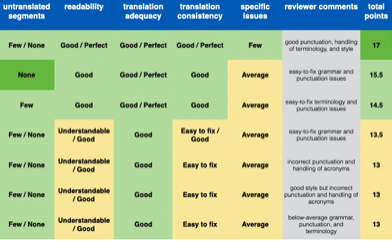

- Human LQA

- Identify the best engine

Stage 1. Cleaning

At this stage, we clean large, real-life TMs, using techniques that range from duplicate detection to MTQE/BERT/LASER.

We found that many non-medical texts end up in medical datasets simply because they contain words such as “virus”, “death”, or “healthy”.

This affects the outcomes down the line.

These are some curious examples:

“When Jesus heard it, he saith to them, They that are in health, have no need of a physician, but they that are sick; I came not to call the righteous, but sinners, to repentance.”

“The virus has infected Skynet.” “I question everything, it's healthy.”
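The duplicate-detection end of this cleaning stage can be illustrated with a minimal sketch. It is not the pipeline used in the study: the `normalize` and `deduplicate` helpers and the sample TM entries are hypothetical, and the normalization rules (Unicode NFKC, case folding, whitespace collapsing) are an assumption about what counts as "the same" segment.

```python
import unicodedata


def normalize(text: str) -> str:
    """Normalize a segment for comparison: NFKC, case-folded, whitespace collapsed."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.casefold().split())


def deduplicate(pairs):
    """Keep the first occurrence of each (source, target) pair after normalization."""
    seen = set()
    unique = []
    for source, target in pairs:
        key = (normalize(source), normalize(target))
        if key not in seen:
            seen.add(key)
            unique.append((source, target))
    return unique


# Hypothetical TM entries, for illustration only.
tm = [
    ("Wash your hands.", "Lavez-vous les mains."),
    ("wash  your hands.", "Lavez-vous les mains."),  # differs only in case and spacing
    ("Stay at home.", "Restez chez vous."),
]
cleaned = deduplicate(tm)
print(len(tm), "->", len(cleaned))
```

Exact matching after normalization only catches trivial duplicates. Near-duplicates and off-domain segments like the examples above need the heavier, model-based end of the range.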

Stage 2. Training

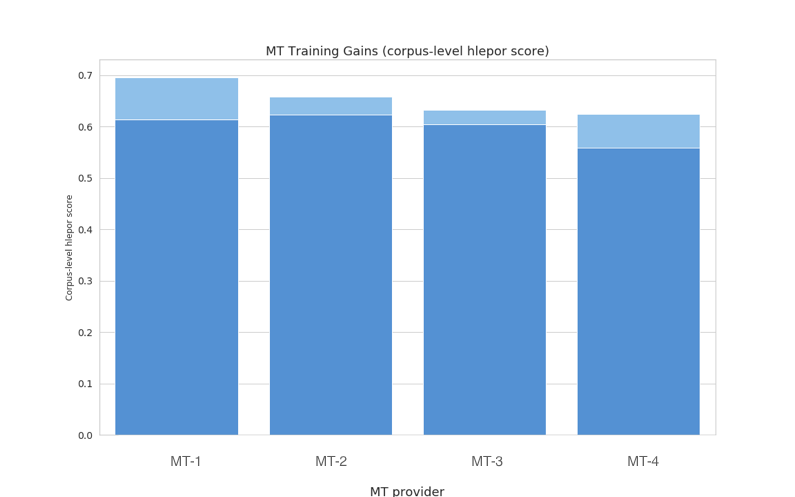

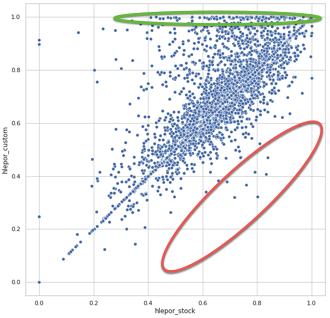

The customized models translate much closer to the reference set; we see lots of score improvements. At the same time, customization can lead to omissions or mistranslations in some segments.

Stage 3. Segment and engine filtering

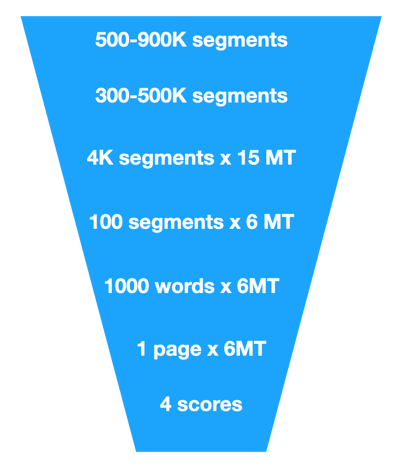

Filtering reduces the volume that reviewers have to review from 2M to 12K words.

After that, top-running engines are identified.

Then we choose the most indicative segments.

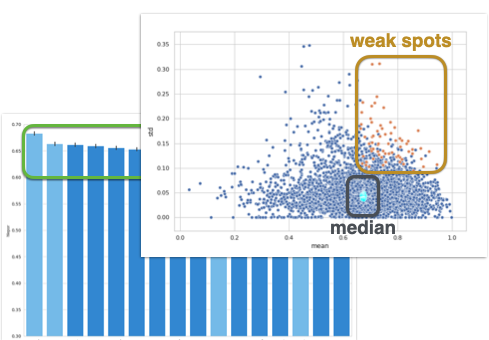

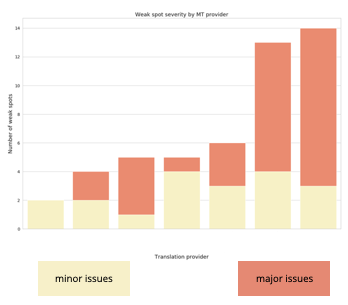

Stage 4. Weak spot analysis

The types of observed issues are:

- Mistranslated words and omissions.

- Untranslated words or entire segments.

Rarer, more exotic weak spots are:

- Translation into the wrong language (e.g. Traditional Chinese instead of Simplified).

- Using English punctuation when translating into Chinese.

- Omissions typical for custom engines.

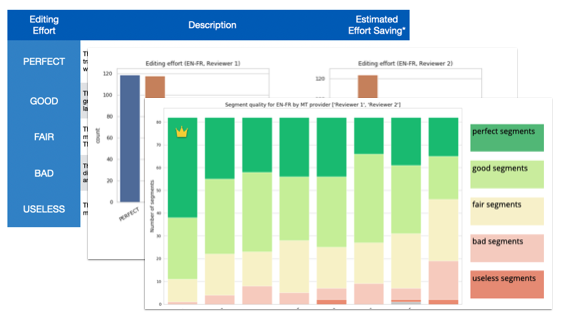
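Some of these weak spots can be flagged automatically before human review. As a rough sketch, assuming a simple regular-expression heuristic (not the method used in the study), the check below looks for ASCII punctuation directly after a Chinese character. The function name and sample strings are hypothetical.

```python
import re

# A CJK ideograph immediately followed by ASCII punctuation that has a
# full-width counterpart in Chinese typography. Punctuation between digits
# (e.g. "37.5") is not matched, because the preceding character is not CJK.
ASCII_PUNCT_AFTER_CJK = re.compile(r"[\u4e00-\u9fff][,.;:!?]")


def has_english_punctuation(segment: str) -> bool:
    """Return True if ASCII punctuation directly follows a CJK character."""
    return ASCII_PUNCT_AFTER_CJK.search(segment) is not None


# Hypothetical MT outputs, for illustration only.
print(has_english_punctuation("请勤洗手."))       # ASCII full stop
print(has_english_punctuation("请勤洗手。"))      # full-width full stop
print(has_english_punctuation("体温为37.5度。"))  # decimal point only
```

A heuristic like this will miss cases such as ASCII quotes or parentheses, so it works best as a first-pass filter rather than a replacement for reviewers.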

Stage 5. Post-editing

This stage includes:

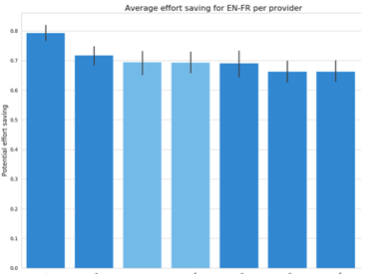

- Measuring effort-saving

- Editing distance

- Effort rating

- Reviewer agreement

- Top MT engines offer effort savings in the range of 65-80%

Human Translation was never ranked first, and in two language pairs it came last. Those translations are the most heavily edited because they are too localized or do not exactly match the source, while MTs and translations suggested by reviewers are literal.

Effort saving and editing distance are not always correlated. A GOOD segment can take many edits to make it PERFECT. A gross mistranslation can be corrected with only a couple of edits.
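To make the notion of editing distance concrete, here is a minimal sketch of a word-level Levenshtein distance between raw MT output and its post-edited version. The exact metric used in the reports is not specified here, so this is an assumption, and the function names and sample sentences are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        current = [i]
        for j, item_b in enumerate(b, start=1):
            cost = 0 if item_a == item_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def normalized_distance(mt: str, post_edited: str) -> float:
    """Word-level edit distance divided by the length of the longer segment."""
    mt_tokens, pe_tokens = mt.split(), post_edited.split()
    longest = max(len(mt_tokens), len(pe_tokens))
    return edit_distance(mt_tokens, pe_tokens) / longest if longest else 0.0


# Hypothetical example: the MT output reverses the meaning, yet one edit fixes it.
mt_output = "The patient must not be isolated"
post_edit = "The patient must be isolated"
print(edit_distance(mt_output.split(), post_edit.split()))
print(round(normalized_distance(mt_output, post_edit), 3))
```

In this example a meaning-reversing error costs a single word deletion, which is why a low editing distance alone does not indicate a good translation.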

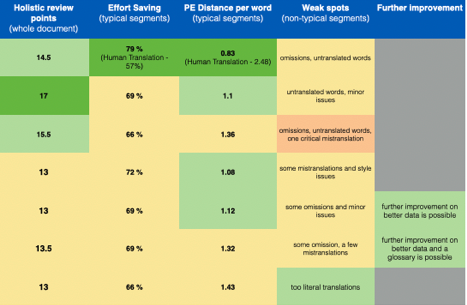

Stage 6. Holistic review

Full document translation.

Challenges: consistency, tag handling.

Translation from Chinese was the toughest for MT engines. The text was bureaucratic, with long, complex sentences. Most translations ended up useless and unreadable, even though the same engines handled test set segments well enough.

Handling abbreviations is hard for many engines: “MERS” (Middle East Respiratory Syndrome) becomes plural “LE MER” in Italian and “DIE MER” in German.

“ARDS” (Acute Respiratory Distress Syndrome) and “MODS” (Multiple Organ Dysfunction Syndrome), which have established abbreviations in target languages, are often left unchanged.
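Abbreviation handling of this kind can be spot-checked automatically. The sketch below assumes a hand-built mapping from source abbreviations to the form expected in the target language. The mapping, the function name, and the sample sentences are hypothetical and for illustration only; a real list would come from a terminologist.

```python
import re


def find_abbreviation_issues(source: str, target: str, expected: dict) -> list:
    """Return source abbreviations whose expected target form is missing from the target."""
    problems = []
    for abbreviation, target_form in expected.items():
        in_source = re.search(rf"\b{re.escape(abbreviation)}\b", source)
        in_target = re.search(rf"\b{re.escape(target_form)}\b", target)
        if in_source and not in_target:
            problems.append(abbreviation)
    return problems


# Illustrative English>French mapping (an assumption, not taken from the reports).
expected_fr = {"ARDS": "SDRA", "MERS": "MERS"}

source = "Patients with ARDS require ventilation."
left_unchanged = "Les patients atteints d'ARDS nécessitent une ventilation."
adapted = "Les patients atteints de SDRA nécessitent une ventilation."

print(find_abbreviation_issues(source, left_unchanged, expected_fr))
print(find_abbreviation_issues(source, adapted, expected_fr))
```

Mapping an abbreviation to itself, as with "MERS" here, also catches cases where the engine mangles a term that should stay unchanged.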

Stage 7. Putting it all together

Custom models did not show explosive growth over stock models. The main reason is that customization brought them closest to the reference, while the reference itself didn't rank well.

More domain-specific and homogeneous data is needed to really appreciate the value of trained models.

Glossaries create more challenges than benefits: they ruin good sentence structure and result in grammatical errors, while not necessarily improving the use of the terminology. DNT glossaries are an exception.

Factors that affect custom NMT performance

Positive

Customized engines demonstrate explosive growth in quality compared to stock engines when they are trained on homogeneous, even standardized, data, with a clear format, uniform style, and consistent use of terminology. More standardized text of instructions, guidelines, protocols, product descriptions, etc. works well as training data for custom NMT.

These data features lead to great NMT performance:

1. A large number of parallel segments that all use the same pattern, e.g. instructions, product descriptions.

2. Consistent translations: patterns in source segments are mapped one-to-one to patterns in target segments.

3. Consistent use of terminology: term “A” in the source language is always translated “B” in the target language, with no variations.

4. Consistent style: style is the same throughout the training data, e.g. formal or informal.

5. Consistent domain: all training segments are strictly in the same domain.
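Terminology consistency, the third feature above, can be estimated on a training set before any money is spent on training. The sketch below is one possible check, assuming you already have a list of candidate target renderings for a source term. The function name, the candidate list, and the sample segments are hypothetical.

```python
from collections import Counter


def term_variants(pairs, source_term, candidate_translations):
    """Count which candidate rendering appears in targets whose source contains the term."""
    counts = Counter()
    for source, target in pairs:
        if source_term.lower() not in source.lower():
            continue
        matched = [c for c in candidate_translations if c.lower() in target.lower()]
        # Record the first matching candidate, or "<none>" if no candidate is found.
        counts[matched[0] if matched else "<none>"] += 1
    return counts


# Hypothetical English>French training segments, for illustration only.
training_pairs = [
    ("Wear a face mask.", "Portez un masque."),
    ("A face mask is required.", "Un couvre-visage est obligatoire."),
    ("Remove the face mask.", "Retirez le masque."),
    ("Stay at home.", "Restez chez vous."),
]
variants = term_variants(training_pairs, "face mask", ["masque", "couvre-visage"])
print(variants)
```

More than one key in the result means the term is rendered inconsistently, which is the situation the list above warns against.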


Negative

1. Heterogeneous training data, no clear format, no defined style, a fuzzy domain: these can make training results less impressive.

2. Free and inconsistent reference translations also make training less effective.

3. Mismatched source and target texts: when the target text contains information that the source text doesn’t or vice versa.

4. Wrong language in the source or target text.

5. Target texts are very localized, not literal translations of source texts; many synonyms are used in target texts for the same word in source texts.
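Mismatched source and target texts (item 3 above) are often caught with a simple length-ratio filter. The sketch below is a common heuristic rather than the method used in this study. The function name and the `low` and `high` thresholds are assumptions and would need tuning per language pair, since character counts differ widely between, for example, Chinese and English.

```python
def length_ratio_ok(source: str, target: str, low: float = 0.5, high: float = 2.0) -> bool:
    """Return True if the target/source character-length ratio lies within [low, high]."""
    if not source.strip() or not target.strip():
        return False  # an empty side is always a mismatch
    ratio = len(target) / len(source)
    return low <= ratio <= high


# Hypothetical segment pairs, for illustration only.
good_pair = ("Wash your hands.", "Lavez-vous les mains.")
padded_pair = (
    "Wash your hands.",
    "Lavez-vous les mains. Cette offre est valable jusqu'à la fin du mois.",
)
print(length_ratio_ok(*good_pair))
print(length_ratio_ok(*padded_pair))
```

A ratio check cannot detect rephrased or heavily localized targets of similar length, so it complements rather than replaces semantic similarity checks.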

Source texts like the examples below undermine the performance of NMT engines.

Among the segments below, some have medical terminology, others are vaguely healthcare-related, while others have little to do with medicine or healthcare.

“The study compared the effects of dronabinol (THC) and placebo on colonic motility and sensation in healthy adults.”

“Cindy can not understand why, despite her healthy habits during the week, she was still gaining weight.”

“Our experts can even coach you on Internet safety, social media basics, removing viruses and so much more.”

“Guests also enjoy discounts at the nearby Holmes Place health club.”

The following segment is an example of a re-phrased target translation:

Source: “Similar effects can also result from exposure to chemicals that influence nervous system development, but have no known action on the endocrine system.”

Target: “L'exposition à des substances qui influent sur le développement neurologique, mais n'ont pas d'action connue sur le système endocrinien, peut également provoquer des effets similaires.”

To access the seven reports, please fill in the form.